AILIA: Artificial Intelligence Laboratory for Image Analysis

During my final year of engineering school in Paris, I created the Artificial Intelligence Laboratory for Image Analysis (AILIA), a platform that processes images and generates deep-learning-based predictions on them. The goal was to measure how image processing affects state-of-the-art models and to analyze the relevance of their predictions. Compatible models are convolutional neural networks (CNNs), which are well suited to image analysis (e.g. ResNet, Inception, VGG). The platform comes with a RESTful API built with Flask.

Overview

Understanding neural networks can be hard at first. AILIA therefore gathers the tools to process and classify new images in a single, minimal application.

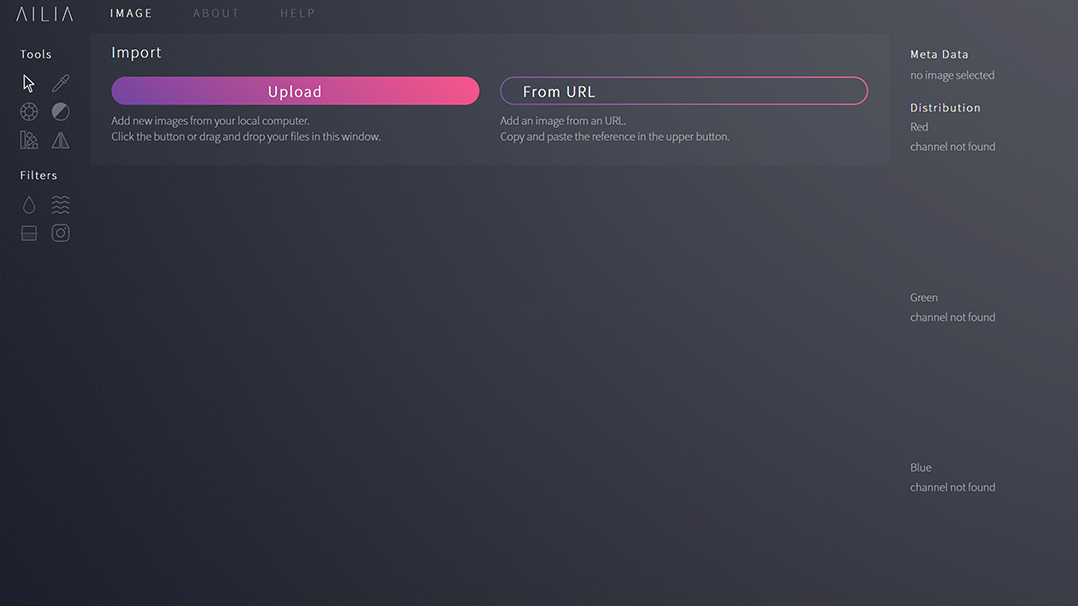

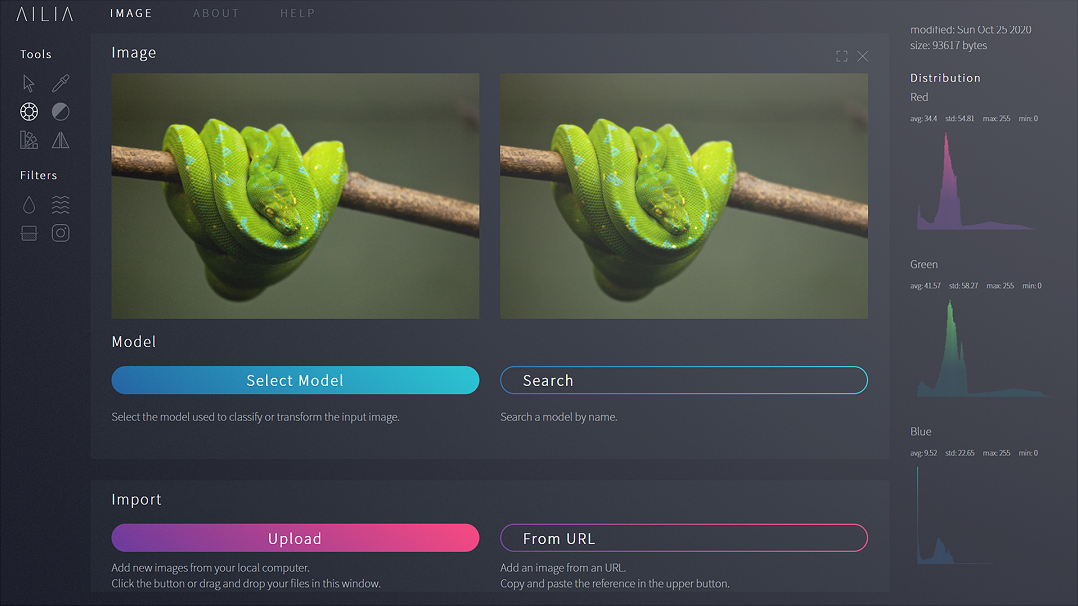

Import your images

You can upload images from your computer or provide the URL of an online image. Import as many images as you want; they appear in the central panel. The metadata and color-channel distribution of the selected image appear in the right panel, so you can keep track of it.
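
The channel distribution is essentially a per-channel histogram of pixel intensities. The sketch below computes one with the standard library only; representing an image as a flat list of (r, g, b) tuples and grouping values into equal-width buckets are assumptions for illustration, not AILIA's actual data model.

```python
from collections import Counter

# Assumed representation: an RGB image as a flat list of (r, g, b) tuples,
# each channel an integer in [0, 255].
Pixel = tuple[int, int, int]


def channel_histograms(pixels: list[Pixel], bins: int = 8) -> dict[str, list[int]]:
    """Count how many values fall into each of `bins` equal-width buckets, per channel."""
    if not 1 <= bins <= 256:
        raise ValueError("bins must be between 1 and 256")
    width = 256 / bins  # width of one bucket in intensity units
    histograms = {}
    for index, name in enumerate(("red", "green", "blue")):
        counts = Counter(int(pixel[index] // width) for pixel in pixels)
        histograms[name] = [counts.get(bucket, 0) for bucket in range(bins)]
    return histograms


pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (128, 128, 128)]
print(channel_histograms(pixels, bins=4))
```

With four buckets of width 64, each channel of this tiny image has two zeros, one mid-gray value and one saturated value, so every channel yields the counts [2, 0, 1, 1].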

To import an image, click the Import button. You can also provide a URL to import an online image into the AILIA platform.
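
Importing from a URL boils down to downloading the bytes and checking that they really are an image. The following is a minimal standard-library sketch; accepting only PNG and JPEG, the 10 MB size cap, and the helper names (`is_valid_image_url`, `sniff_image_type`, `fetch_image`) are hypothetical choices, not taken from AILIA.

```python
from typing import Optional
from urllib.parse import urlparse
from urllib.request import urlopen

# Leading bytes ("magic numbers") that identify two common image formats.
SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
}


def is_valid_image_url(url: str) -> bool:
    """Accept only absolute http(s) URLs that name a host."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def sniff_image_type(data: bytes) -> Optional[str]:
    """Return the image format detected from the first bytes, or None."""
    for signature, kind in SIGNATURES.items():
        if data.startswith(signature):
            return kind
    return None


def fetch_image(url: str, max_bytes: int = 10 * 1024 * 1024,
                timeout: float = 10.0) -> tuple[str, bytes]:
    """Download an image (hypothetical helper) and check that it is PNG or JPEG."""
    if not is_valid_image_url(url):
        raise ValueError(f"unsupported URL: {url!r}")
    with urlopen(url, timeout=timeout) as response:
        data = response.read(max_bytes + 1)  # one extra byte reveals oversized files
    if len(data) > max_bytes:
        raise ValueError("image is too large")
    kind = sniff_image_type(data)
    if kind is None:
        raise ValueError("downloaded content is not a PNG or JPEG image")
    return kind, data
```

Checking the file signature rather than the URL's extension avoids trusting names like `photo.jpg` that actually serve HTML error pages.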

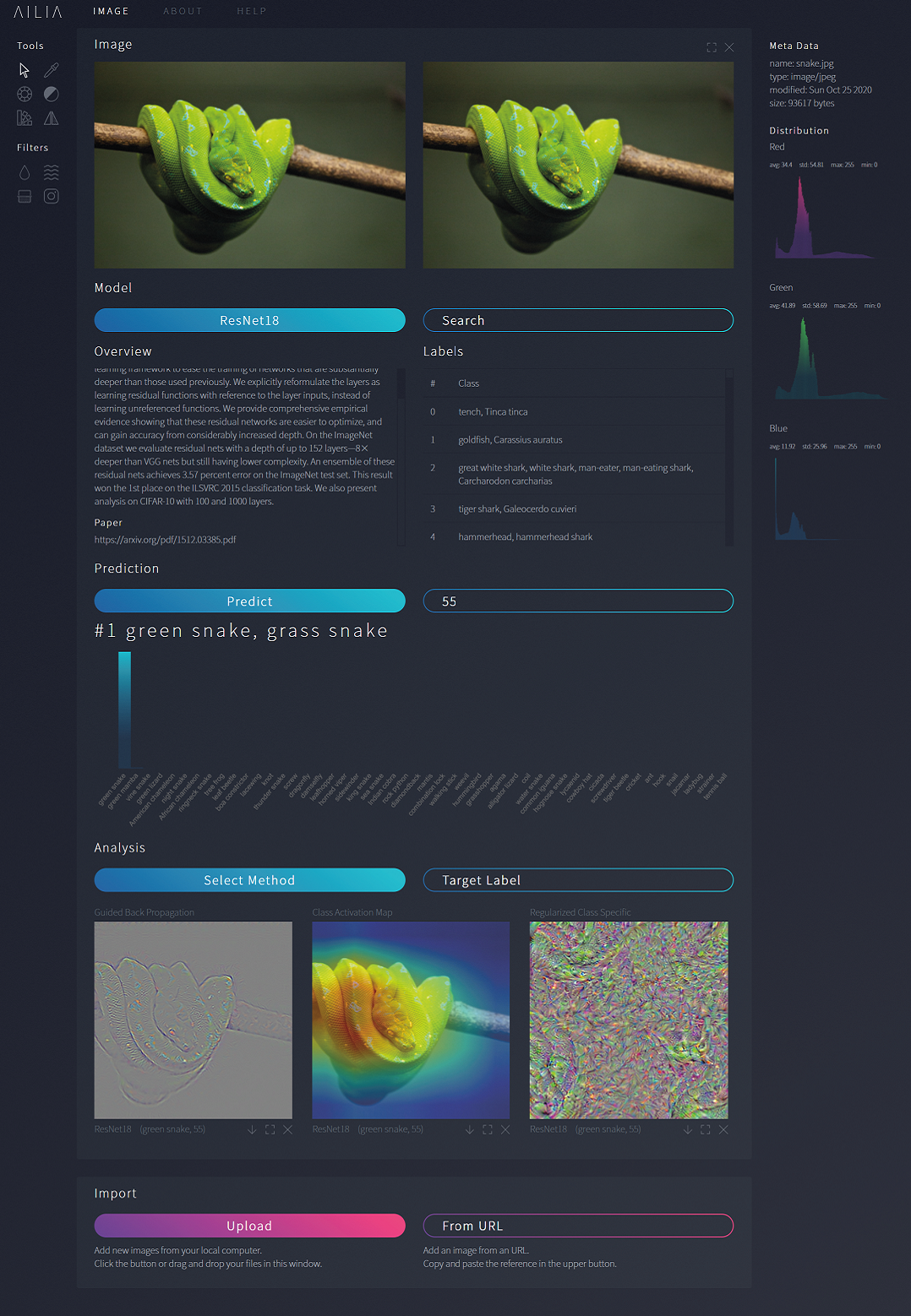

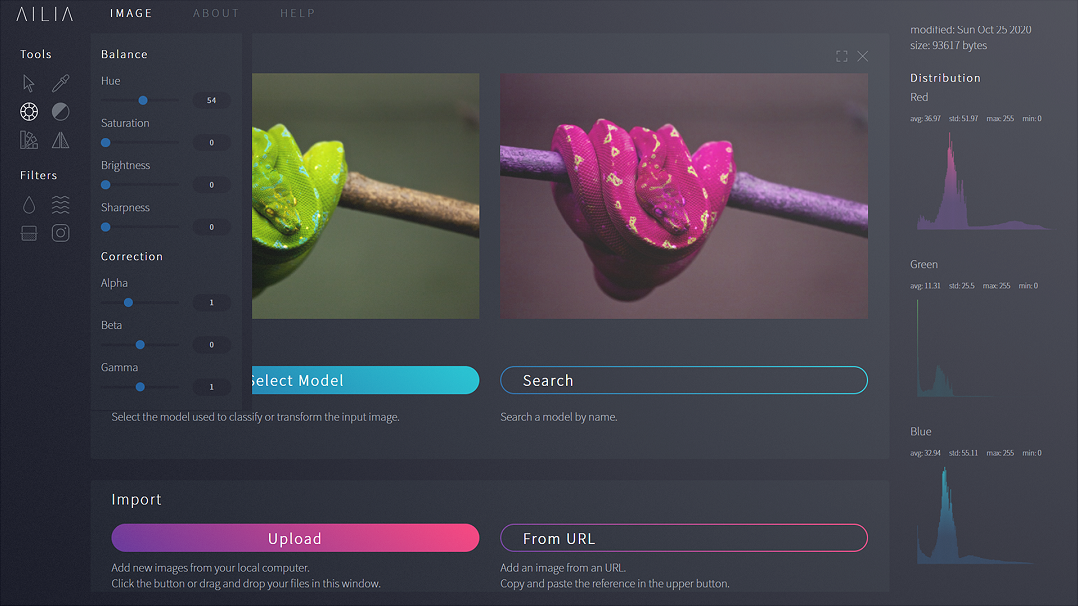

Use custom filters

To trick state-of-the-art models, you can play with the image's filters and tones. Changing a single parameter can completely change the model's output, so feel free to explore combinations of color-grading settings!
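
Tone filters such as brightness and contrast are simple per-pixel functions. The sketch below applies a linear adjustment to 8-bit RGB pixels; the parameter names and the mid-gray pivot of 128 are assumptions, and AILIA's own filters may be implemented differently.

```python
Pixel = tuple[int, int, int]


def clamp(value: float) -> int:
    """Round to the nearest integer and clip to the 8-bit range [0, 255]."""
    return max(0, min(255, round(value)))


def adjust(pixels: list[Pixel], brightness: float = 0.0,
           contrast: float = 1.0) -> list[Pixel]:
    """Scale each channel around mid-gray (contrast), then shift it (brightness)."""
    return [
        tuple(clamp((channel - 128) * contrast + 128 + brightness) for channel in pixel)
        for pixel in pixels
    ]


print(adjust([(100, 150, 200)], brightness=20))  # each channel shifted up by 20
print(adjust([(100, 150, 200)], contrast=2.0))   # values pushed away from 128, then clipped
```

Because every pixel the model sees is shifted at once, even one slider like this can be enough to move a prediction to another class.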

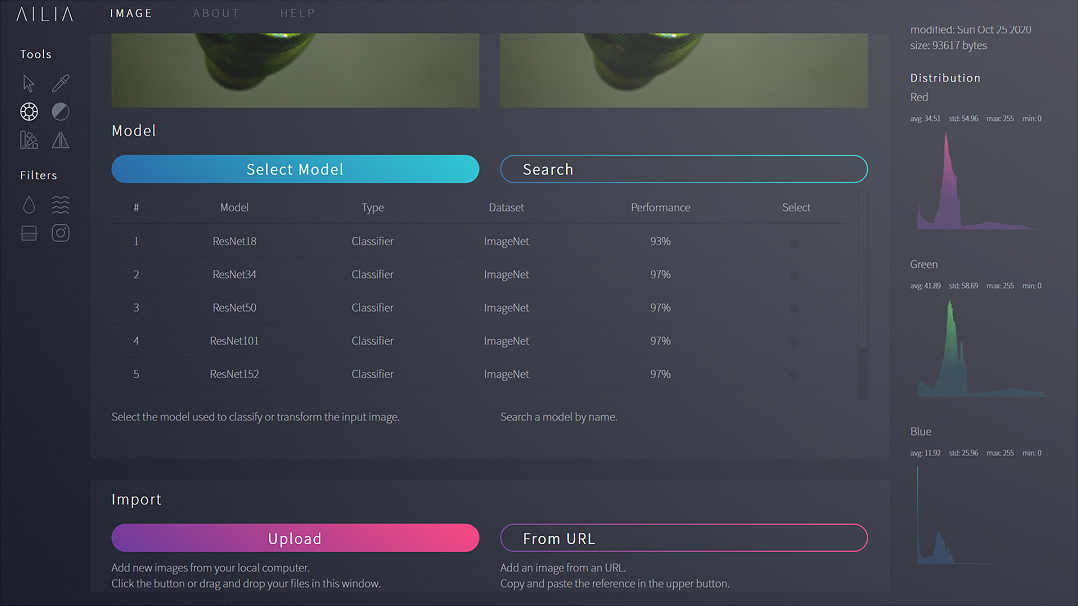

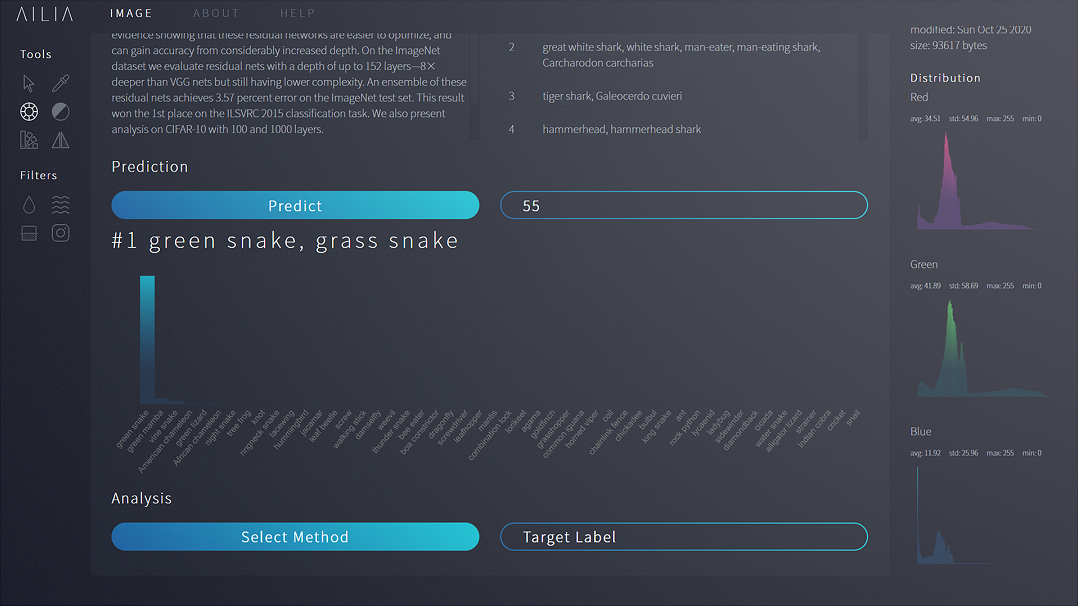

Classify your images

The final step is to classify the modified image. For this, AILIA provides state-of-the-art models trained on the widely used ImageNet dataset, in its 1,000-class ILSVRC 2012 version.
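
Pretrained ImageNet models usually expect inputs resized to a fixed resolution (224 x 224 for ResNet and VGG, 299 x 299 for Inception v3) and normalized with the statistics of the training set. The sketch below covers only the normalization step. The mean and standard deviation values are the ones used by torchvision's pretrained models; whether AILIA uses this convention is an assumption, since Keras versions of the same architectures preprocess differently (Inception v3 in Keras, for instance, scales inputs to [-1, 1]).

```python
# Per-channel mean and standard deviation of the ImageNet training set, in the
# convention used by torchvision's pretrained models (assumed, not confirmed for AILIA).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

Pixel = tuple[int, int, int]


def normalize(pixels: list[Pixel]) -> list[tuple[float, ...]]:
    """Scale 8-bit RGB values to [0, 1], then standardize each channel."""
    return [
        tuple(
            (value / 255 - mean) / std
            for value, mean, std in zip(pixel, IMAGENET_MEAN, IMAGENET_STD)
        )
        for pixel in pixels
    ]


print(normalize([(0, 0, 0), (255, 255, 255)]))
```

Feeding a model inputs normalized with the wrong convention is itself a kind of "filter", and can degrade predictions as much as an aggressive color grade.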

To select the model that will be used for classification tasks, click on the Select Model button.

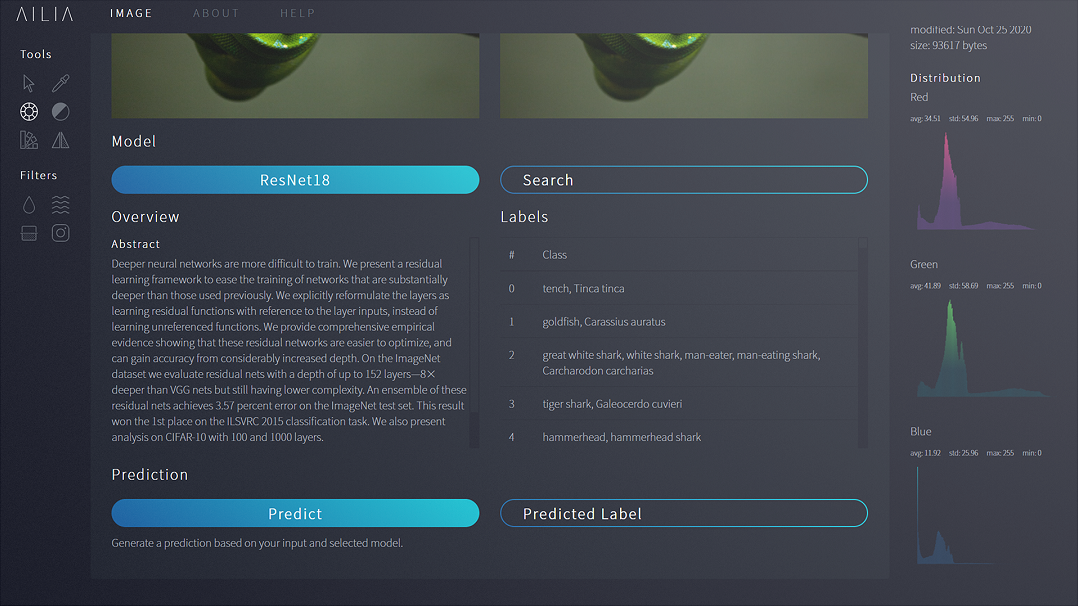

Once a model is selected, you will see an overview of it and of the dataset used to train it, along with the original paper describing the model's architecture.
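
The overview shown after selection can be backed by a simple metadata registry. The sketch below is hypothetical: the `ModelInfo` structure and the registry keys are illustrative, and only the three architectures named in the introduction are listed, each with the title of its original paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    """Metadata displayed when a model is selected (hypothetical structure)."""
    name: str
    dataset: str
    num_classes: int
    paper: str


# Illustrative registry; keys are arbitrary identifiers chosen for this sketch.
MODELS = {
    "resnet50": ModelInfo(
        "ResNet-50", "ImageNet (ILSVRC 2012)", 1000,
        "He et al., Deep Residual Learning for Image Recognition"),
    "vgg16": ModelInfo(
        "VGG-16", "ImageNet (ILSVRC 2012)", 1000,
        "Simonyan & Zisserman, Very Deep Convolutional Networks "
        "for Large-Scale Image Recognition"),
    "inception_v3": ModelInfo(
        "Inception v3", "ImageNet (ILSVRC 2012)", 1000,
        "Szegedy et al., Rethinking the Inception Architecture for Computer Vision"),
}


def describe(key: str) -> str:
    """Build the one-line overview shown after a model is selected."""
    info = MODELS[key]
    return (f"{info.name} | dataset: {info.dataset}, {info.num_classes} classes"
            f" | paper: {info.paper}")


print(describe("resnet50"))
```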

To classify your image, click the Predict button. The predicted label appears on the right, and the corresponding class name appears under the button.
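
A classifier outputs one raw score (logit) per class; the predicted label is the index of the highest-scoring class, and its name is looked up from that index. A minimal sketch, assuming raw logits and a class-name list ordered by index; `top_k` and its return format are hypothetical, not AILIA's API.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores to probabilities; subtracting the max avoids exp() overflow."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: list[float], class_names: list[str],
          k: int = 5) -> list[tuple[int, str, float]]:
    """Return (label, name, probability) for the k most likely classes, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, class_names[i], probs[i]) for i in ranked[:k]]


# Toy three-class example; a real ImageNet model would produce 1,000 logits.
print(top_k([1.0, 3.0, 0.5], ["cat", "dog", "snake"], k=2))
```

Reporting the top few classes with their probabilities, rather than only the best one, makes it easier to see how close a filter came to flipping the prediction.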

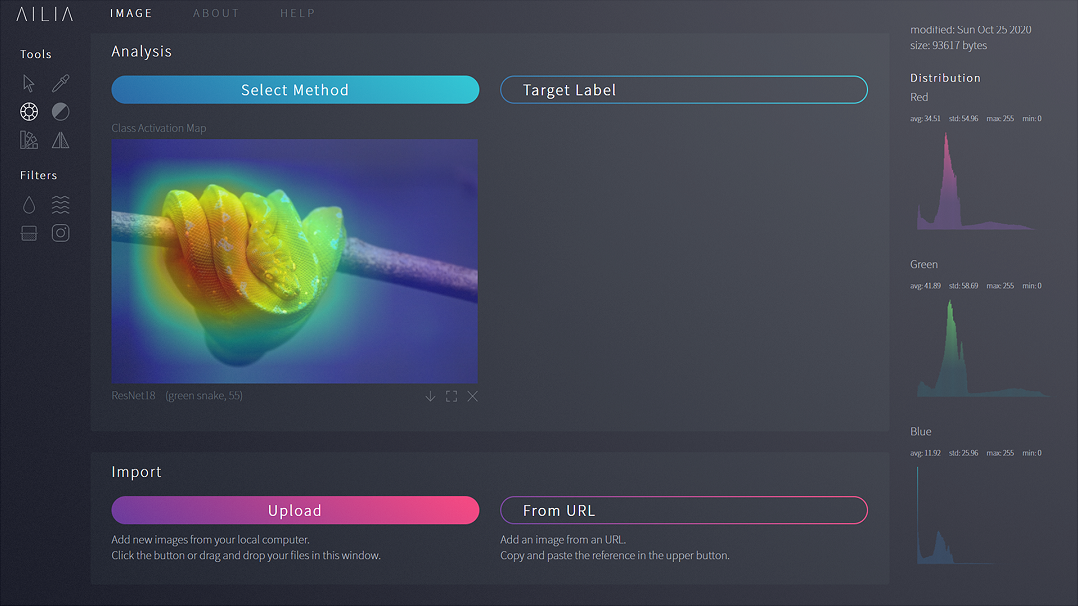

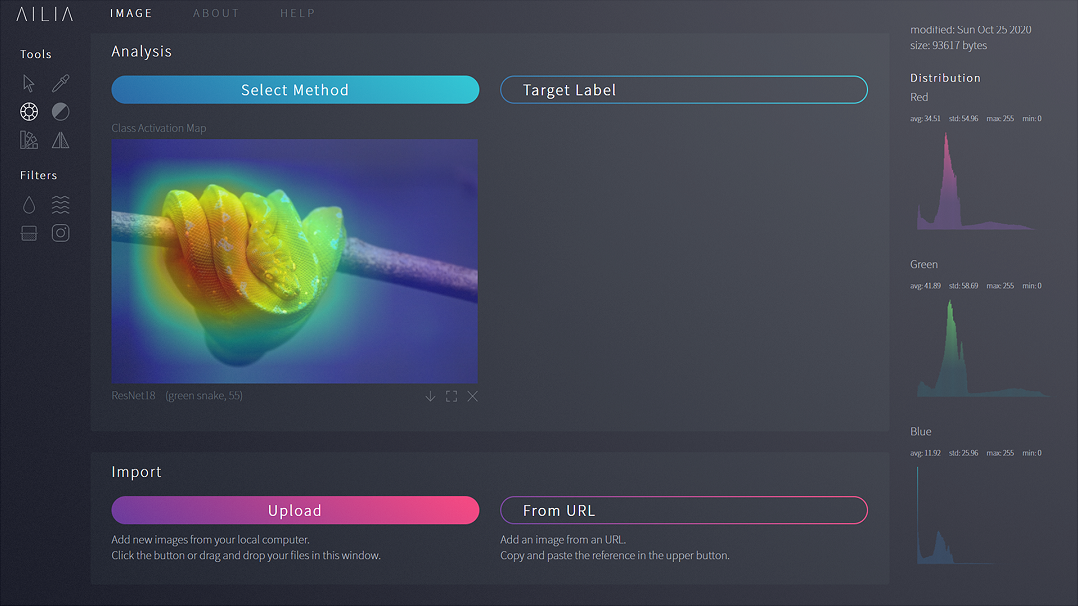

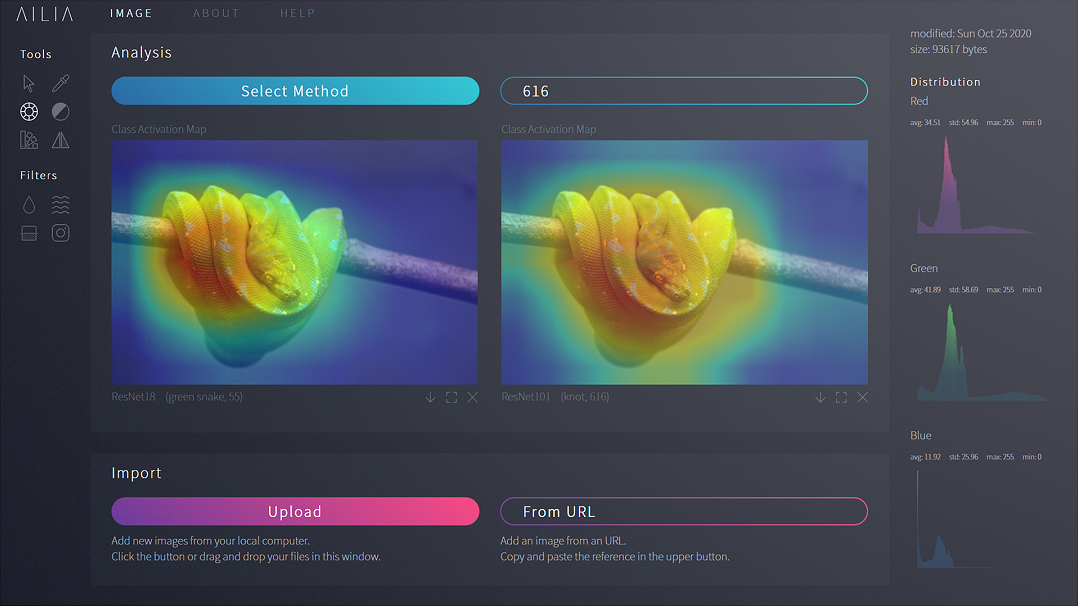

Visualize attention

To visualize the most important features (here, the features extracted by the last convolutional layer), choose an analysis method. These methods highlight the regions of the input image that contributed most to the prediction.

The Target Label selects the class whose features are extracted. For example, computing a Class Activation Map (CAM) on an image classified as "Green Snake" (target 55) highlights the regions of the input image that best match a "Green Snake". Choosing another label, such as "knot" (target 616), instead highlights the features that best correspond to a "Knot" in the input image.
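
In its original formulation, CAM assumes the network ends with global average pooling followed by a single dense layer (as ResNet and Inception v3 do). The map for class c is then the weighted sum M_c(x, y) = sum over k of w_k^c * f_k(x, y), where f_k are the feature maps of the last convolutional layer and w_k^c are the dense-layer weights for class c. The sketch below uses plain nested lists; the function name and data layout are assumptions. It shows how the target label simply selects a different row of weights.

```python
FeatureMap = list[list[float]]  # one H x W activation grid


def class_activation_map(features: list[FeatureMap],
                         class_weights: list[list[float]],
                         target: int) -> FeatureMap:
    """CAM for class `target`: weighted sum of the K feature maps, scaled to [0, 1].

    features:      K maps of shape H x W from the last convolutional layer.
    class_weights: num_classes x K weights of the final dense layer.
    """
    weights = class_weights[target]  # the target label picks one row of weights
    if len(weights) != len(features):
        raise ValueError("need one weight per feature map")
    height, width = len(features[0]), len(features[0][0])
    cam = [
        [sum(w * fmap[y][x] for w, fmap in zip(weights, features)) for x in range(width)]
        for y in range(height)
    ]
    low = min(min(row) for row in cam)
    high = max(max(row) for row in cam)
    span = (high - low) or 1.0  # avoid dividing by zero on a constant map
    return [[(value - low) / span for value in row] for row in cam]


# Toy example: map 0 fires top-left, map 1 fires bottom-right.
features = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
class_weights = [[1.0, 0.0], [0.0, 1.0]]  # class 0 relies on map 0, class 1 on map 1
print(class_activation_map(features, class_weights, target=0))
print(class_activation_map(features, class_weights, target=1))
```

In practice the resulting low-resolution map is upsampled to the input size and overlaid on the image as a heatmap. With a real ImageNet model, targets 55 and 616 would select two of the 1,000 weight rows.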

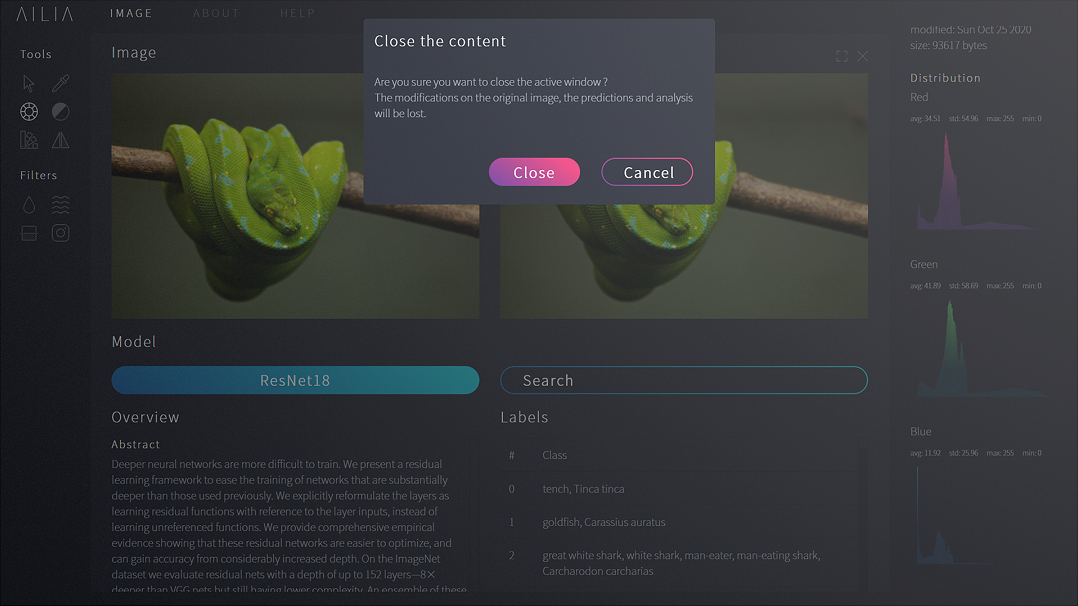

Start again

Discard your modifications and upload a new image!

Click the cross button in the upper-right corner of the active content to delete it, then click the Close button to confirm.